FeatureSmith: Automatically Engineering Features for Malware Detection by Mining the Security Literature

High-level

Summary:

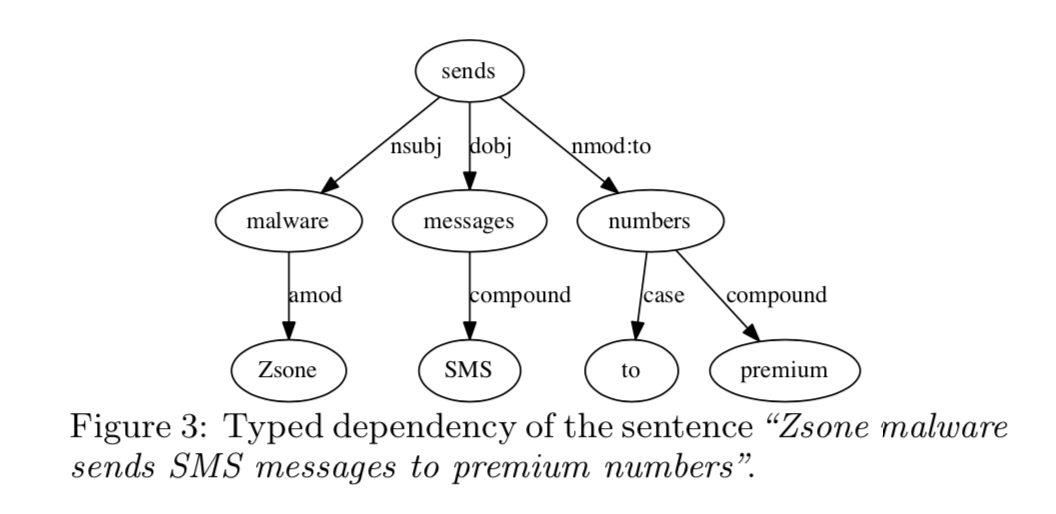

We propose an end-to-end approach for automatic feature engineering. We describe techniques for mining documents written in natural language (e.g. scientific papers) and for representing and querying the knowledge about malware in a way that mirrors the human feature engineering process. Specifically, we first identify abstract behaviors that are associated with malware, and then we map these behaviors to concrete features that can be tested experimentally. We implement these ideas in a system called FeatureSmith, which generates a feature set for detecting Android malware. We train a classifier using these features on a large data set of benign and malicious apps.
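To make the "behaviors to concrete features" step tangible, here is a minimal sketch under our own assumptions: a toy semantic network in which the family names, behaviors, and edge weights are all hypothetical, and each feature is scored by summing weight products along family → behavior → feature paths. This is an illustration of the idea, not FeatureSmith's actual weighting scheme; `FAMILY_TO_BEHAVIOR`, `BEHAVIOR_TO_FEATURE`, and `rank_features` are invented names.

```python
from collections import defaultdict

# Hypothetical toy semantic network. Weights stand in for how strongly the
# literature associates two concepts (NOT the paper's actual weights).
FAMILY_TO_BEHAVIOR = {
    "family_a": {"sends premium SMS": 3.0, "steals contacts": 1.0},
    "family_b": {"steals contacts": 2.0},
}
BEHAVIOR_TO_FEATURE = {
    "sends premium SMS": {"android.permission.SEND_SMS": 2.0},
    "steals contacts": {
        "android.permission.READ_CONTACTS": 1.5,
        "android.permission.INTERNET": 0.5,
    },
}


def rank_features(family_to_behavior, behavior_to_feature):
    """Score each concrete feature by summing w(family, behavior) * w(behavior, feature)
    over every family -> behavior -> feature path, then sort by score (descending)."""
    scores = defaultdict(float)
    for behaviors in family_to_behavior.values():
        for behavior, w_fb in behaviors.items():
            for feature, w_bf in behavior_to_feature.get(behavior, {}).items():
                scores[feature] += w_fb * w_bf
    return sorted(scores.items(), key=lambda kv: kv[1], reverse=True)


ranked = rank_features(FAMILY_TO_BEHAVIOR, BEHAVIOR_TO_FEATURE)
```

With these toy weights, `SEND_SMS` ranks first (6.0), then `READ_CONTACTS` (4.5), then `INTERNET` (1.5): a feature tied to a strongly emphasized behavior outranks one that is only weakly linked to several families.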

Evaluation:

This classifier achieves a 92.5% true positive rate with only 1% false positives, which is comparable to the performance of a state-of-the-art Android malware detector that relies on manually engineered features. In addition, FeatureSmith is able to suggest informative features that are absent from the manually engineered set and to link the features generated to abstract concepts that describe malware behaviors.
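A "true positive rate at 1% false positives" is read off a single operating point: the threshold is fixed on benign scores first, and the malware detection rate is measured at that threshold. A minimal sketch, assuming the classifier emits a real-valued score per app (higher means more likely malicious); `tpr_at_fpr` is a hypothetical helper, not part of FeatureSmith.

```python
import math


def tpr_at_fpr(benign_scores, malware_scores, target_fpr=0.01):
    """Choose a threshold so that at most target_fpr of benign apps score strictly
    above it, then return (threshold, TPR, achieved FPR) at that threshold."""
    ranked = sorted(benign_scores, reverse=True)
    allowed_fp = math.floor(target_fpr * len(ranked))  # benign apps we may misflag
    # The threshold is the score of the first benign app that must NOT be flagged;
    # only scores strictly greater than it count as detections.
    threshold = ranked[allowed_fp] if allowed_fp < len(ranked) else float("-inf")
    tpr = sum(s > threshold for s in malware_scores) / len(malware_scores)
    fpr = sum(s > threshold for s in benign_scores) / len(benign_scores)
    return threshold, tpr, fpr


benign = [i / 100 for i in range(100)]
malware = [0.995, 0.999, 0.5, 0.985]
threshold, tpr, fpr = tpr_at_fpr(benign, malware, target_fpr=0.01)
```

Here one benign app (score 0.99) may be misflagged, so the threshold lands at 0.98 and three of the four malware scores clear it (TPR 0.75 at FPR 0.01).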

Takeaways:

Drebin [8], a state-of-the-art system for detecting Android malware, takes into account 545,334 features from 8 different classes.
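Drebin's eight classes (hardware components, requested permissions, app components, filtered intents, restricted API calls, used permissions, suspicious API calls, and network addresses) produce a huge but sparse binary space, since each app exhibits only a small fraction of the features. A minimal sketch of one common way to store such vectors, assuming each app is given as a list of `class::value` strings: keep a growing vocabulary and record only the column indices that are set. The string prefixes and the `vectorize` helper are hypothetical, not Drebin's actual encoding.

```python
def vectorize(apps):
    """Assign each distinct feature string a column index on first sight and
    represent every app by the sorted list of columns it activates (sparse binary)."""
    vocab = {}
    rows = []
    for features in apps:
        cols = set()
        for f in features:
            if f not in vocab:
                vocab[f] = len(vocab)  # next free column
            cols.add(vocab[f])
        rows.append(sorted(cols))
    return vocab, rows


apps = [
    ["permission::android.permission.SEND_SMS",
     "api_call::android.telephony.SmsManager.sendTextMessage"],
    ["permission::android.permission.SEND_SMS",
     "intent::android.intent.action.BOOT_COMPLETED"],
]
vocab, rows = vectorize(apps)
```

Storing only active indices keeps memory proportional to the features an app actually exhibits rather than to the full 545,334-dimensional space.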

We found that some benign apps from the Drebin ground truth are labeled as malicious by some VirusTotal products.

Additionally, anecdotal evidence suggests that adversaries may intentionally poison raw data.

We evaluate the contribution of individual features to the classifier’s performance by using the mutual information metric.
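Mutual information measures how much knowing a feature's value reduces uncertainty about the label: I(X;Y) = Σ p(x,y) log2 [p(x,y) / (p(x) p(y))]. A minimal sketch computing it from empirical counts, assuming a binary feature (present/absent) and a binary label (benign/malicious); the paper's exact estimator, such as its log base, may differ.

```python
import math
from collections import Counter


def mutual_information(feature, label):
    """Empirical mutual information I(X;Y), in bits, between two equal-length
    sequences of discrete values (e.g. feature present/absent vs. malware/benign)."""
    n = len(feature)
    joint = Counter(zip(feature, label))
    px = Counter(feature)
    py = Counter(label)
    mi = 0.0
    for (x, y), c in joint.items():
        pxy = c / n
        # Only observed (x, y) pairs contribute; zero-probability cells add nothing.
        mi += pxy * math.log2(pxy / ((px[x] / n) * (py[y] / n)))
    return mi


perfect = mutual_information([1, 1, 0, 0], [1, 1, 0, 0])
independent = mutual_information([1, 0, 1, 0], [1, 1, 0, 0])
```

A feature that perfectly tracks a balanced label carries 1 bit; one that is independent of the label carries 0 bits, so ranking features by this value surfaces the most informative ones.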

Practical Value

What can you learn from this to make your research better?

Why not mine the threat reports?

The semantic network used in this work can be trivially transformed into a graph-based signature.
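One possible reading of this claim, under assumptions of our own: collect the features that the semantic network links to a set of behaviors, and flag an app only if every behavior is evidenced by at least `min_overlap` of its linked features. The network contents, `build_signature`, `matches`, and the overlap rule are hypothetical illustrations, not taken from the paper.

```python
# Hypothetical semantic network edges: abstract behavior -> concrete features.
SEMANTIC_NETWORK = {
    "sends premium SMS": {
        "permission::android.permission.SEND_SMS",
        "api_call::android.telephony.SmsManager.sendTextMessage",
    },
    "starts at boot": {"intent::android.intent.action.BOOT_COMPLETED"},
}


def build_signature(network, behaviors):
    """A signature maps each chosen behavior to the set of features that realize it."""
    return {b: frozenset(network[b]) for b in behaviors}


def matches(signature, app_features, min_overlap=1):
    """Flag the app if every behavior in the signature is evidenced by at least
    min_overlap of that behavior's features."""
    return all(len(feats & app_features) >= min_overlap for feats in signature.values())


sig = build_signature(SEMANTIC_NETWORK, ["sends premium SMS", "starts at boot"])
```

Requiring evidence for every behavior, rather than any single feature, makes the signature describe a combination of behaviors, which is closer to how the semantic network relates malware to what it does.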

Details and Problems

From the presenters’ point of view, what questions might the audience ask?

How large is the evaluation dataset?

For our experimental evaluation, we utilize malware samples from the Drebin data set [8], shared by the authors. This data set includes 5,560 malware samples, and also provides the feature vectors extracted from the malware and from 123,453 benign applications. While these feature vectors define values for 545,334 features, FeatureSmith can discover additional features, not covered by Drebin. We therefore extract these additional features from the apps. We obtain the expanded feature vectors for 5,552 malware samples and 5,553 benign apps. The collected applications exhibit 43,958 out of 545,334 Drebin features and 133 out of 195 features generated by FeatureSmith. Note that we use the malware samples only for the evaluation in Section 4; the feature generation utilizes the malware names and the document corpus.
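Working through the quoted numbers shows that the evaluation set is essentially balanced, and that the collected apps touch only a small slice of Drebin's feature space but most of the FeatureSmith feature set:

$$
5{,}552 + 5{,}553 = 11{,}105 \text{ apps}, \qquad
\frac{43{,}958}{545{,}334} \approx 8.06\%, \qquad
\frac{133}{195} \approx 68.2\%
$$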

How well can this tool generalize?

Wait, how automatic can this tool be?

Baseline ranking method: We extract all the API calls, intents and permissions mentioned in our paper corpus, whether they are related to malware or not, and we rank them by how often they are mentioned. This term frequency (TF) metric is commonly used in text mining for extracting frequent keywords.
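A minimal sketch of this TF baseline, assuming candidate terms can be found with a simple regular expression over fully qualified Android identifiers. This is a simplification of our own: real mentions such as bare method names would need a curated term list. `TERM_PATTERN` and `tf_rank` are hypothetical names.

```python
import re
from collections import Counter

# Hypothetical pattern: dotted identifiers starting with "android"
# (permissions, intent actions, fully qualified API classes/methods).
TERM_PATTERN = re.compile(r"\bandroid(?:\.[A-Za-z_][A-Za-z0-9_]*)+")


def tf_rank(documents):
    """Count every mention of a candidate term across the corpus, regardless of
    whether it appears in a malware context, and rank terms by that count."""
    counts = Counter()
    for doc in documents:
        counts.update(TERM_PATTERN.findall(doc))
    return counts.most_common()


docs = [
    "Malware abuses android.permission.SEND_SMS and android.permission.INTERNET.",
    "It requests android.permission.SEND_SMS at install time.",
]
ranking = tf_rank(docs)
```

Because the count ignores context, a term that is discussed often for unrelated reasons ranks just as high as one tied to malicious behavior; that context-blindness is what separates this baseline from the behavior-driven ranking.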